In recent years, large language models (LLMs) such as GPT-4 and its successors have had a major impact on the AI field. Transformer-based models have driven significant progress, especially in natural language processing. However, there is growing debate about whether these models can truly reason. This article examines the reasoning limitations of current transformer architectures and outlines a framework for addressing them.

The Current State of Reasoning in Transformers

While transformers have shown remarkable proficiency in generating human-like text, they fundamentally operate on statistical patterns learned from vast datasets. This raises crucial questions about their ability to perform reasoning tasks effectively.

Example: The Challenge of Mathematical Operations

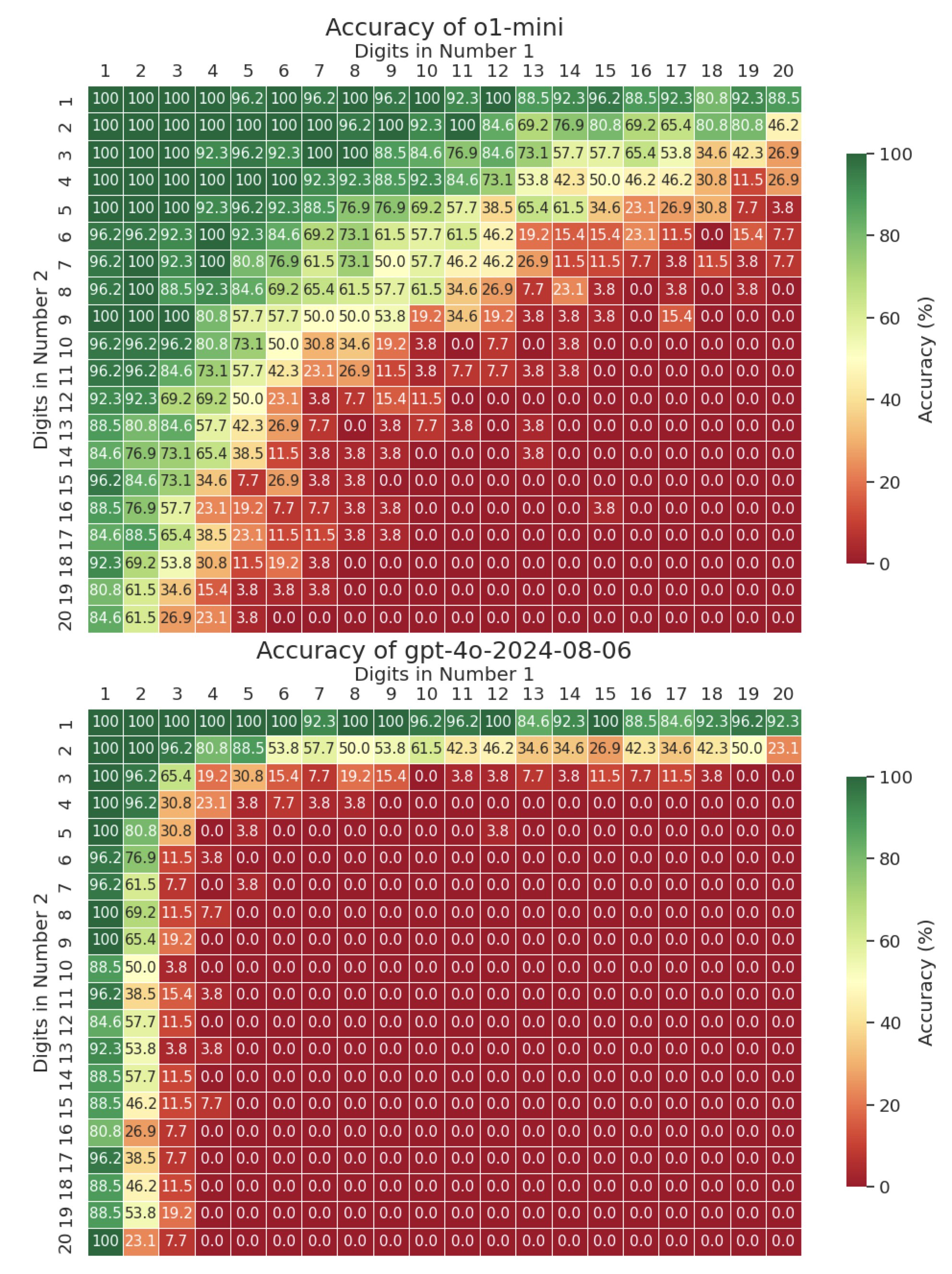

Consider a recent experiment that measured how accurately transformer models perform basic multiplication. The results indicate that the models reach high accuracy only in specific cases. For instance, they may multiply by 1 correctly, but their accuracy drops sharply as the problems become more complex. This pattern suggests the models are reproducing familiar cases rather than applying a general multiplication procedure, which is a sign that robust reasoning is missing.
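An evaluation of this kind can be sketched in a few lines. The following is a minimal illustration, not the setup behind the figure above: `accuracy_by_digits` and `toy_model` are hypothetical names, and `toy_model` is a deliberately artificial stand-in for a real model call that is exact for small products and wrong for larger ones.

```python
import random
from typing import Callable, Dict


def accuracy_by_digits(multiply: Callable[[int, int], int],
                       max_digits: int = 4,
                       trials: int = 200,
                       seed: int = 0) -> Dict[int, float]:
    """Return the fraction of exactly correct products per operand length."""
    rng = random.Random(seed)
    results = {}
    for digits in range(1, max_digits + 1):
        low, high = 10 ** (digits - 1), 10 ** digits - 1
        correct = 0
        for _ in range(trials):
            a, b = rng.randint(low, high), rng.randint(low, high)
            # Only an exact match counts; "almost right" is still wrong.
            if multiply(a, b) == a * b:
                correct += 1
        results[digits] = correct / trials
    return results


def toy_model(a: int, b: int) -> int:
    """Hypothetical stand-in for a model: exact below 1000, off by one above."""
    product = a * b
    return product if product < 1000 else product + 1


if __name__ == "__main__":
    for digits, accuracy in accuracy_by_digits(toy_model).items():
        print(f"{digits}-digit operands: {accuracy:.0%} correct")
```

To test a real system, `toy_model` would be replaced by a function that sends the prompt to the model and parses the integer from its reply. Grouping results by operand length makes any drop in accuracy visible at a glance.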

Limitations of Current Transformer Models

Understanding the limitations of transformers is essential for advancing AI research. Key points to consider include:

Lack of True Reasoning: Current transformers lack the logical reasoning abilities of traditional expert systems, which rely on formal logic for deduction, induction, and abduction.

Probabilistic Outputs: Transformers generate outputs based on learned probabilities, which do not always align with common human intuition or reasoning patterns.

Inability to Generalize: On many reasoning tasks, transformers fail to generalize what they have learned to novel situations, which leads to errors and misunderstandings.
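The point about probabilistic outputs can be made concrete with a small sketch. It assumes a simplified picture in which a model assigns a score (logit) to each candidate answer and samples from the resulting softmax distribution. The candidates and scores below are made up for illustration and do not come from any real model.

```python
import math
import random


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sample_answer(candidates, logits, rng):
    """Draw one candidate at random, weighted by its softmax probability."""
    return rng.choices(candidates, weights=softmax(logits), k=1)[0]


# Hypothetical scores for the prompt "12 * 12 = ?"
candidates = ["144", "124", "148"]
logits = [2.0, 1.0, 0.5]

rng = random.Random(0)
samples = [sample_answer(candidates, logits, rng) for _ in range(1000)]
share_correct = samples.count("144") / len(samples)

# With these scores the correct answer has a probability of about 0.63,
# so a noticeable share of samples is wrong. A symbolic calculation
# such as 12 * 12 returns 144 every time.
print(f"sampled '144' in {share_correct:.0%} of draws")
```

The most likely answer is correct here, yet sampling still returns wrong answers regularly. A rule-based system has no such spread: it either derives the answer or fails explicitly.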

The Need for an Advanced Architecture

To address these limitations, we must envision an architecture that integrates transformers with additional reasoning capabilities. Here are some elements that can enhance this framework:

Modular Design: Incorporate specialized runtimes capable of handling complex reasoning tasks, such as symbolic logic or constraint satisfaction problems. This modularity allows different components to work together seamlessly, leveraging their unique strengths.

Dynamic Resource Allocation: Allocate computational resources according to the demands of each task, so that expensive components run only when they are needed.

Enhanced Memory Management: Use advanced memory architectures to support various reasoning tasks, enabling the system to retrieve and manipulate information dynamically.

Multi-Algorithmic Approach: Allow the integration of diverse algorithms, facilitating hybrid reasoning strategies that combine probabilistic and logical approaches.

Feedback Loops for Continuous Learning: Establish feedback mechanisms that enable the system to learn from each component's outputs, leading to continuous improvement and adaptation to user needs.
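As a rough illustration of the modular and multi-algorithmic ideas above, the sketch below routes a query to an exact symbolic evaluator when it is a plain integer expression and falls back to a language model otherwise. Everything here is an assumption made for the example: `symbolic_arithmetic` and `answer` are hypothetical names, the evaluator supports only `+`, `-`, `*`, and `//` on integer literals, and `language_model` stands for whatever callable wraps a real model.

```python
import ast
import operator
from typing import Callable, Optional

# Operators the symbolic component is allowed to evaluate.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.FloorDiv: operator.floordiv,
}


def symbolic_arithmetic(query: str) -> Optional[int]:
    """Evaluate an integer expression exactly, or return None if it is not one."""
    try:
        tree = ast.parse(query.strip(), mode="eval")
    except (SyntaxError, ValueError):
        return None  # not parseable as an expression

    def evaluate(node):
        if isinstance(node, ast.Constant) and type(node.value) is int:
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](evaluate(node.left), evaluate(node.right))
        raise ValueError("unsupported expression")

    try:
        return evaluate(tree.body)
    except (ValueError, ZeroDivisionError):
        return None


def answer(query: str, language_model: Callable[[str], str]) -> str:
    """Prefer the exact component; use the language model for everything else."""
    exact = symbolic_arithmetic(query)
    if exact is not None:
        return str(exact)
    return language_model(query)


if __name__ == "__main__":
    fake_model = lambda q: f"[model reply to: {q}]"  # placeholder, not a real model
    print(answer("123456789 * 987654321", fake_model))
    print(answer("Why do transformers struggle with arithmetic?", fake_model))
```

The design choice worth noting is that the router never asks the language model to do what a deterministic component can do exactly. A fuller system would add more specialized components, such as a constraint solver, behind the same dispatch function.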

Implications for AI Development

Understanding the limitations of transformer reasoning capabilities has broader implications:

Realistic Expectations: Developers and users must have realistic expectations of what transformers can achieve, particularly in critical decision-making scenarios.

Ethical Considerations: Misinterpretations of AI capabilities can lead to ethical dilemmas and challenges in accountability, particularly in fields like healthcare or law enforcement.

Advancing AI Research: Recognizing these limitations should encourage researchers to explore alternative models and hybrid architectures, fostering innovation and new methodologies in AI development.

Conclusion

As we evaluate the reasoning capabilities of transformer models, it's clear that while they are powerful tools for natural language processing, they lack the true reasoning abilities of traditional expert systems. By understanding their limitations and envisioning advanced architectures that incorporate additional reasoning components, we can create more robust AI systems capable of tackling complex problems.

Ultimately, the goal is to harness the strengths of transformers while acknowledging when to integrate other subsystems that can provide reliable reasoning. As AI systems continue to evolve, a nuanced understanding of their capabilities and limitations will be essential for leveraging their full potential in our increasingly complex world.